Attention Notes

paper: https://arxiv.org/pdf/1706.03762

cover: from anime “Code Geass Lelouch of the Rebellion”

Before the paper

Basically, attention is loosely modeled on how human eyes focus on the relevant part of a scene. It turns an input into vectors that represent its features in a higher-dimensional space.

Attention

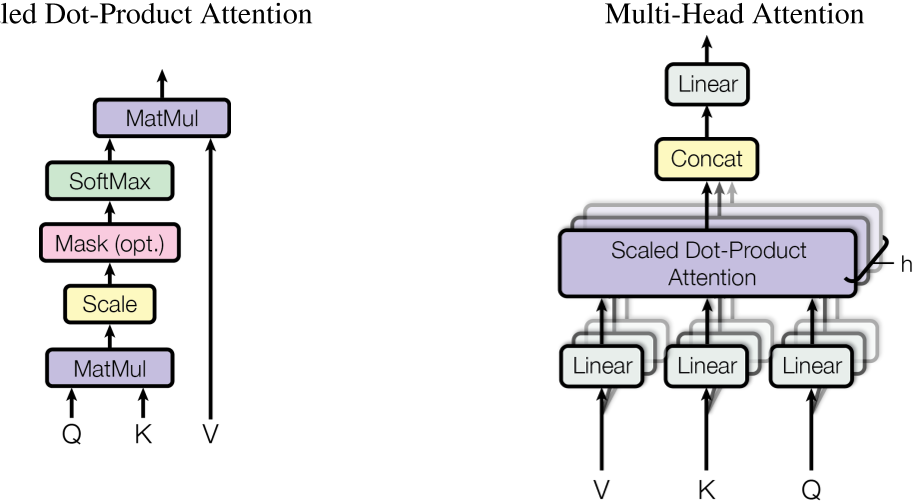

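The operation of Attention can be written as the following equation:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$

It is an operation over the matrices Q, K, and V: Q holds the queries, K the keys, and V the values. The dot product of Q and K is divided by $\sqrt{d_k}$ (the square root of the key dimension) to scale its value, then fed into softmax for normalization, and the result is multiplied by V. The softmax output is a set of attention weights, a probability distribution over the keys for each query, so the overall output is a weighted sum of the values.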
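To make the steps concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It assumes Q, K, and V are small lists of lists and skips batching and masking; the function names and the toy inputs are made up for illustration.

```python
import math

def softmax(row):
    # Subtract the max before exponentiating for numerical stability.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Q: n x d_k, K: m x d_k, V: m x d_v, all as lists of lists."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Scaled similarity between this query and every key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)  # non-negative and sums to 1
        # Weighted sum of the value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out

# Toy example: one query that matches the first key more closely.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
result = scaled_dot_product_attention(Q, K, V)
```

Because the query is closer to the first key, the output leans toward the first value vector while still mixing in some of the second.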

Model

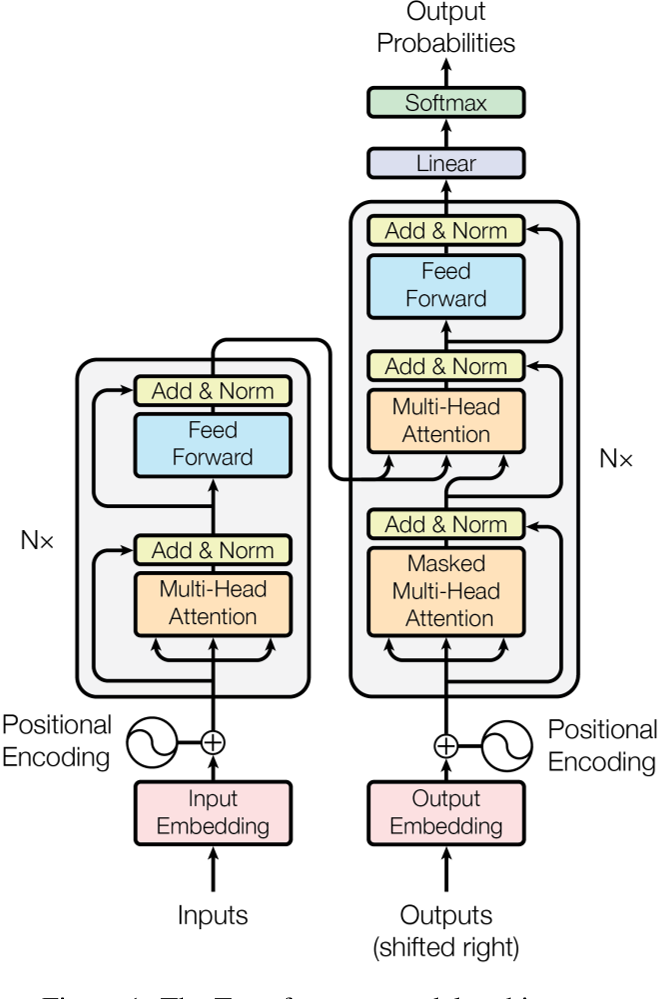

Transformer

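It has an encoder-decoder structure, with the encoder mapping an input sequence of symbol representations $(x_1, \dots, x_n)$ to a sequence of continuous representations $z = (z_1, \dots, z_n)$. Given $z$, the decoder generates the output sequence $(y_1, \dots, y_m)$ one symbol at a time.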

Encoder and Decoder

Encoder:

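stack of 6 identical layers, each with 2 sublayers;

sublayer 1: multi-head self-attention;

sublayer 2: simple, position-wise fully connected feed-forward network;

each of the 2 sublayers is wrapped in a residual connection, followed by layer normalization;

output dimension: $d_{model} = 512$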

Decoder:

stack of 6 identical layers; besides the 2 sublayers of an encoder layer, a third sublayer is inserted that performs multi-head attention over the output of the encoder stack;

each sublayer has a residual connection, followed by layer normalization
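The "residual connection, followed by layer normalization" pattern amounts to LayerNorm(x + Sublayer(x)). Below is a rough sketch for a single position vector. It assumes a layer norm without the learned gain and bias, and `toy_sublayer` is a hypothetical stand-in for attention or the feed-forward network.

```python
import math

def layer_norm(x, eps=1e-6):
    # Normalize one position's vector to zero mean and unit variance.
    # The learned gain and bias of a full LayerNorm are omitted here.
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / math.sqrt(var + eps) for xi in x]

def add_and_norm(x, sublayer):
    # LayerNorm(x + Sublayer(x)): the residual path adds the input back in.
    y = sublayer(x)
    return layer_norm([xi + yi for xi, yi in zip(x, y)])

def toy_sublayer(x):
    # Hypothetical sublayer used only for this illustration.
    return [2.0 * xi for xi in x]

out = add_and_norm([1.0, 2.0, 3.0, 4.0], toy_sublayer)
```

The addition only works when the sublayer output has the same size as its input, which is why every sublayer in the model produces vectors of the same dimension.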

Attention

Multi-Head Attention runs several Attention functions in parallel.

The two most commonly used attention functions are additive attention and dot-product attention. The latter is more efficient in both speed and space.

Multi-Head Attention

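Linearly project the queries, keys and values $h$ times with different learned projections, run Attention on each projected version in parallel, then concatenate the results and project once more.

The operation can be defined as:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)W^O$$

$$\mathrm{head}_i = \mathrm{Attention}(QW_i^Q, KW_i^K, VW_i^V)$$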
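A small sketch of multi-head attention in plain Python follows. It assumes toy sizes (model dimension 4, 2 heads) and random projection matrices in place of learned ones; `rand_matrix` and the other helper names are invented for this example.

```python
import math
import random

def matmul(A, B):
    # Plain matrix product of two lists of lists.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]

def attention(Q, K, V):
    d_k = len(K[0])
    scores = matmul(Q, [list(c) for c in zip(*K)])  # Q K^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V)

def multi_head_attention(Q, K, V, heads, W_o):
    # heads: one (W_q, W_k, W_v) triple of projection matrices per head.
    outputs = [attention(matmul(Q, W_q), matmul(K, W_k), matmul(V, W_v))
               for W_q, W_k, W_v in heads]
    # Concatenate the per-head outputs along the feature axis.
    concat = [sum((head[i] for head in outputs), []) for i in range(len(Q))]
    return matmul(concat, W_o)

def rand_matrix(rows, cols):
    # Random stand-in for learned weights.
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
d_model, h = 4, 2
d_k = d_v = d_model // h
heads = [(rand_matrix(d_model, d_k), rand_matrix(d_model, d_k), rand_matrix(d_model, d_v))
         for _ in range(h)]
W_o = rand_matrix(h * d_v, d_model)
x = rand_matrix(3, d_model)                     # 3 positions
y = multi_head_attention(x, x, x, heads, W_o)   # self-attention: Q = K = V = x
```

Each head works in a smaller subspace of size `d_model // h`, so the total cost stays close to that of one full-width attention.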

Position-wise Feed-Forward Networks

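The same fully connected network is applied to each position separately: two linear transformations with a ReLU in between:

$$\mathrm{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2$$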
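As a quick illustration, the sketch below applies the same two-layer network to every position of a toy sequence. The weights are hand-picked small matrices, not the paper's sizes, and the function names are my own.

```python
def linear(x, W, b):
    # x: vector of length d_in, W: d_in x d_out, b: vector of length d_out.
    return [sum(xi * W[i][j] for i, xi in enumerate(x)) + b[j] for j in range(len(b))]

def ffn(x, W1, b1, W2, b2):
    hidden = [max(0.0, h) for h in linear(x, W1, b1)]  # ReLU
    return linear(hidden, W2, b2)

def position_wise_ffn(X, W1, b1, W2, b2):
    # The same weights are applied to every position independently.
    return [ffn(x, W1, b1, W2, b2) for x in X]

W1 = [[1.0, -1.0, 0.5], [0.0, 2.0, -1.0]]   # 2 -> 3
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # 3 -> 2
b2 = [0.1, -0.1]
X = [[1.0, 1.0], [1.0, 1.0], [-1.0, 0.5]]   # 3 positions
Y = position_wise_ffn(X, W1, b1, W2, b2)
```

Positions with identical inputs get identical outputs, since nothing in this sublayer looks at neighboring positions.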

Embedding and Softmax

Learned embeddings were used to convert the input tokens and output tokens to vectors of dimension $d_{model}$.

Positional Encoding

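No recurrence or convolution is used, so positional encodings are added to the embeddings to let the model make use of the order of the sequence. The functions are:

$$PE_{(pos, 2i)} = \sin\left(pos / 10000^{2i/d_{model}}\right)$$

$$PE_{(pos, 2i+1)} = \cos\left(pos / 10000^{2i/d_{model}}\right)$$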
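A minimal sketch of the sinusoidal positional encoding: even feature indices get a sine and odd indices get the matching cosine. The function name and the toy sizes are assumptions for illustration.

```python
import math

def positional_encoding(max_len, d_model):
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        # i walks over the even feature indices (the "2i" of the formula).
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

pe = positional_encoding(max_len=8, d_model=6)
```

The resulting rows are added element-wise to the token embeddings, so both must share the same dimension.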

Training

- Data: WMT 2014 English-German dataset, WMT 2014 English-French dataset

- Sentences were encoded using byte-pair encoding

- Sentence pairs were batched together by approximate sequence length. Each training batch contained a set of sentence pairs containing approximately 25000 source tokens and 25000 target tokens.
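One possible way to batch by approximate length is sketched below. The notes do not describe the exact algorithm, so the greedy strategy and the `batch_by_length` helper are my own assumptions: sort pairs by length, then fill each batch until a token budget would be exceeded.

```python
def batch_by_length(pairs, max_tokens=25000):
    # pairs: list of (source_tokens, target_tokens), each a list of tokens.
    # Sorting keeps sentences of similar length together, which limits padding.
    ordered = sorted(pairs, key=lambda p: max(len(p[0]), len(p[1])))
    batches, current, src_count, tgt_count = [], [], 0, 0
    for src, tgt in ordered:
        over_budget = (src_count + len(src) > max_tokens
                       or tgt_count + len(tgt) > max_tokens)
        if current and over_budget:
            batches.append(current)
            current, src_count, tgt_count = [], 0, 0
        current.append((src, tgt))
        src_count += len(src)
        tgt_count += len(tgt)
    if current:
        batches.append(current)
    return batches

# Toy data with a tiny budget so the grouping is easy to follow.
pairs = [(["a"] * n, ["b"] * n) for n in [6, 2, 3, 5, 1]]
batches = batch_by_length(pairs, max_tokens=10)
```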

Hardware and Schedule

- Hardware: 8 NVIDIA P100 GPUs

- Time: 12 hours (base model, 100,000 steps)

Optimizer

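Adam optimizer with $\beta_1 = 0.9$, $\beta_2 = 0.98$ and $\epsilon = 10^{-9}$.

The learning rate changes over time:

$$lrate = d_{model}^{-0.5} \cdot \min\left(step\_num^{-0.5},\ step\_num \cdot warmup\_steps^{-1.5}\right)$$

It increases linearly for the first $warmup\_steps = 4000$ steps, then decays proportionally to the inverse square root of the step number.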
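The schedule is easy to sketch as a plain function. The defaults below (`d_model=512`, `warmup_steps=4000`) are the values reported in the paper; the function name is my own.

```python
def transformer_lr(step, d_model=512, warmup_steps=4000):
    # Linear warmup for the first warmup_steps, then inverse-sqrt decay.
    step = max(step, 1)  # avoid 0 ** -0.5 at step 0
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)

peak = transformer_lr(4000)
early = transformer_lr(100)
late = transformer_lr(100000)
```

The two branches of `min` meet exactly at `step == warmup_steps`, which is where the rate peaks.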

Regularization

Three types of regularization:

- Residual Dropout

- Label Smoothing

Where is the third one?
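For the label smoothing item, a minimal sketch is given below. It assumes the common formulation where the true class keeps weight 1 - ε and ε is spread uniformly over all classes, with ε = 0.1 as in the paper. The helper names and the toy probabilities are made up.

```python
import math

def smoothed_targets(true_index, num_classes, epsilon=0.1):
    # (1 - epsilon) on the true class plus epsilon spread over all classes.
    uniform = epsilon / num_classes
    return [(1.0 - epsilon if k == true_index else 0.0) + uniform
            for k in range(num_classes)]

def cross_entropy(target, probs):
    # Cross-entropy between a target distribution and predicted probabilities.
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0)

target = smoothed_targets(true_index=2, num_classes=4)
loss = cross_entropy(target, [0.05, 0.05, 0.85, 0.05])
```

With smoothing, the loss stays above the plain one-hot loss even for a confident correct prediction, which discourages the model from becoming overconfident.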

- Title: Attention Notes

- Author: MelodicTechno

- Created: 2024-09-04 22:54:30

- Updated: 2025-12-06 20:16:08

- Link: https://melodictechno.github.io./2024/09/04/attention/

- Copyright notice: This article is licensed under CC BY-NC-SA 4.0.